Non-visually-derived mental objects tax "visual" pointers

Yu, X.; Türk, U.; Dods, A.; Tian, X.; Lau, E.

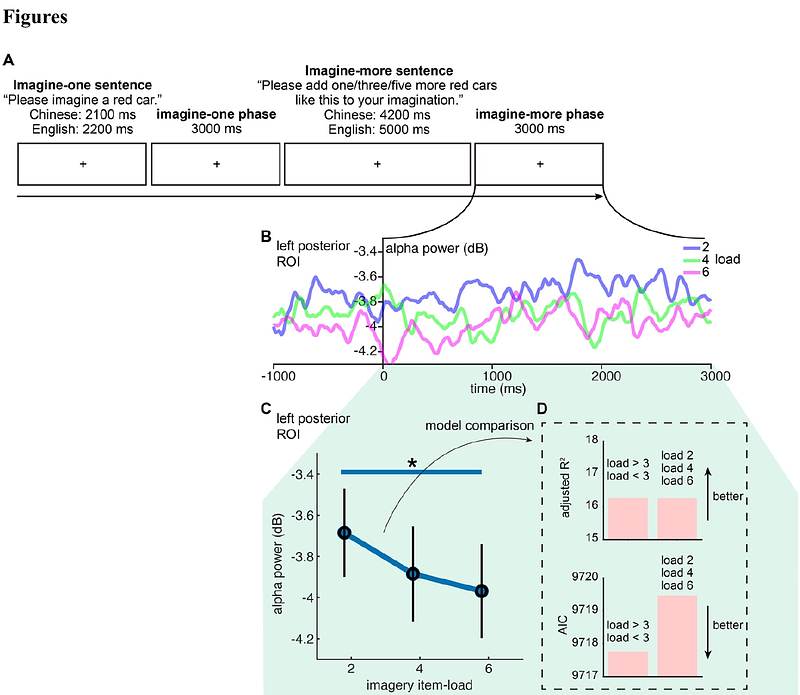

Abstract

In daily life, we can construct mental objects from various modalities: we can create a cat in the mind from visual experience, or from what someone says or signs linguistically. Do mental objects derived from different input sources share the same set of "slots" (or pointers, indexicals)? Employing a novel imagine-one-then-more paradigm in a large EEG sample (N = 52) across two recording sites, we found that increasing the number of imagined objects derived from a non-visual source (auditory speech during eye closure) led to greater suppression of left posterior alpha power. This pattern closely mirrors effects previously reported for visually-derived representations. Our results support the existence of a domain-general pointer system that functions as a modality-independent object register in moment-to-moment cognition.